Ever wanted the config files in your Docker stack to be updated whenever you release a new version of the stack through a CI/CD pipeline? Or were you in a development phase where you were constantly changing the config, but still wanted to know which config was used in which release of the pipeline? Well… maybe you should try the following trick:

    variables:
      HOSTNAME: dockerhost.tuxito.be # Host to deploy docker-compose

    # Clone or Pull Repo to Remote Host
    clone:
      image: alpine
      before_script:
        - apk add openssh-client git jq curl
        - eval $(ssh-agent -s)
        - echo "$SSH_PRIVATE_KEY_ANSIBLE" | tr -d '\r' | ssh-add -
        - mkdir -p ~/.ssh
        - chmod 700 ~/.ssh
        - /usr/bin/git config --global user.email "$GITLAB_USER_EMAIL"
        - /usr/bin/git config --global user.name "$GITLAB_USER_LOGIN"
        - GIT_CLONE_URL="$(curl --silent -XGET "$CI_SERVER_URL/api/v4/projects/$CI_PROJECT_ID?private_token=$ANSIBLE_TOKEN" | jq -r '.ssh_url_to_repo')"
      script:
        - ssh -o StrictHostKeyChecking=no ansible@$HOSTNAME "printf '%s\n %s\n' 'Host *' 'StrictHostKeyChecking no' > ~/.ssh/config && chmod 600 ~/.ssh/config"
        - ssh -o StrictHostKeyChecking=no ansible@$HOSTNAME "if [ -d '$CI_PROJECT_NAME' ]; then (rm -rf $CI_PROJECT_NAME; git clone $GIT_CLONE_URL); else git clone $GIT_CLONE_URL; fi"

    # Deploy stack
    deploy:
      image: alpine
      before_script:
        - apk add openssh-client git jq curl
        - eval $(ssh-agent -s)
        - echo "$SSH_PRIVATE_KEY_ANSIBLE" | tr -d '\r' | ssh-add -
        - mkdir -p ~/.ssh
        - chmod 700 ~/.ssh
      script:
        - ssh -o StrictHostKeyChecking=no ansible@$HOSTNAME "sed -i -E '/CONF_VERSION=/s/=.*/=$CI_JOB_ID/' $CI_PROJECT_NAME/deploy.sh"
        - ssh -o StrictHostKeyChecking=no ansible@$HOSTNAME "cd $CI_PROJECT_NAME; chmod +x deploy.sh; sudo ./deploy.sh"

I’m using a GitLab CI pipeline file here, but the same result can be achieved with any CI/CD tool. It is just a matter of changing the variable name.

More importantly, look at this line:

    ssh -o StrictHostKeyChecking=no ansible@$HOSTNAME "sed -i -E '/CONF_VERSION=/s/=.*/=$CI_JOB_ID/' $CI_PROJECT_NAME/deploy.sh"

It sets CONF_VERSION, the environment variable used in the upcoming docker-compose file, to the value of $CI_JOB_ID, which is a predefined GitLab variable:

CI_JOB_ID

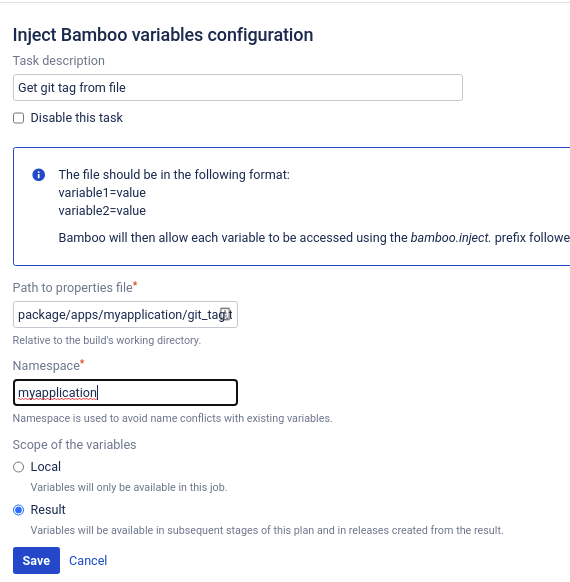

The unique ID of the current job that GitLab CI/CD uses internally.

If you were using Bamboo, for example, you could use bamboo.buildNumber.
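If you want to see what that sed expression does before pointing it at a real host, here is a minimal local sketch. The helper name `set_conf_version`, the throwaway file and the job ID 48213 are made up for illustration, and it assumes GNU or BusyBox sed (BSD/macOS sed wants a suffix argument after `-i`).

```shell
#!/bin/sh
# Hypothetical helper: rewrite the CONF_VERSION line of a script in place.
set_conf_version() {
    file="$1"
    version="$2"
    # Same address-plus-substitute form as in the pipeline: only lines that
    # contain "CONF_VERSION=" are touched, and everything from the first "="
    # to the end of the line is replaced by "=<version>".
    sed -i -E "/CONF_VERSION=/s/=.*/=${version}/" "$file"
}

# Demo against a throwaway file instead of the real deploy.sh.
demo_file="$(mktemp)"
printf '%s\n' 'export ELASTIC_VERSION=7.10.1' 'export CONF_VERSION=1' > "$demo_file"

set_conf_version "$demo_file" 48213   # 48213 stands in for $CI_JOB_ID
cat "$demo_file"
```

The ELASTIC_VERSION line stays untouched because the address only matches lines containing CONF_VERSION=. With another CI tool, the second argument would simply come from that tool's build number.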

Then for the trick in your docker-compose file:

    version: "3.7"

    services:
      elasticsearch:
        image: elasticsearch:${ELASTIC_VERSION}
        hostname: elasticsearch
        environment:
          - "discovery.type=single-node"
          - "xpack.monitoring.collection.enabled=true"
        ports:
          - 9200:9200
          - 9300:9300
        networks:
          - elastic
        volumes:
          - type: volume
            source: elasticsearch-data
            target: /usr/share/elasticsearch/data
          - type: volume
            source: snapshots
            target: /snapshots
        deploy:
          mode: replicated
          replicas: 1
          placement:
            constraints: [node.hostname == morsuv1416.agfa.be]
        secrets:
          - source: elasticsearch-config
            target: /usr/share/elasticsearch/config/elasticsearch.yml
            mode: 0644
            uid: "1000"
            gid: "1000"

      filebeat:
        image: docker.elastic.co/beats/filebeat:${ELASTIC_VERSION}
        hostname: "{{.Node.Hostname}}-filebeat"
        ports:
          - "5066:5066"
        user: root
        networks:
          - elastic
        secrets:
          - source: filebeat-config
            target: /usr/share/filebeat/filebeat.yml
        volumes:
          - filebeat:/usr/share/filebeat/data
          - /var/run/docker.sock:/var/run/docker.sock
          - ...

    secrets:
      elasticsearch-config:
        file: configs/elasticsearch.yml
        name: elasticsearch-config-v${CONF_VERSION}
      filebeat-config:
        file: configs/filebeat.yml
        name: filebeat-config-v${CONF_VERSION}

As you can see, the name of the secret in the Docker stack changes with every deploy, but the reference to the secret (that is, the config file) stays the same in docker-compose.yml.
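Swarm secrets cannot have their content changed once created, which is presumably why the version goes into the name. A side benefit is that the name tells you which pipeline job produced the config a service is running with. The sketch below shows one way to read that back; the helper `conf_version_of` and the job ID are hypothetical, and the `docker service inspect` template in the comment is an assumption about the service spec layout that I have not run against this stack.

```shell
#!/bin/sh
# Hypothetical helper: given a versioned secret name such as
# "elasticsearch-config-v48213", print the version part ("48213").
conf_version_of() {
    # ${1##*-v} strips the longest prefix that ends in "-v".
    printf '%s\n' "${1##*-v}"
}

# Assumed usage on a swarm manager (not executed here):
#   docker service inspect elkstack_elasticsearch \
#     --format '{{range .Spec.TaskTemplate.ContainerSpec.Secrets}}{{.SecretName}}{{"\n"}}{{end}}'
# Each name it prints can then be passed to conf_version_of.

conf_version_of "elasticsearch-config-v48213"
```

The number it prints is the job ID that the pipeline wrote into CONF_VERSION, so you can look up the matching job in your CI tool.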

So all that is left is the deploy.sh script that we call from the gitlab-ci file, and you are good to go:

    #!/bin/bash
    export ELASTIC_VERSION=7.10.1
    export ELASTICSEARCH_USERNAME=elastic
    export ELASTICSEARCH_PASSWORD=changeme
    export ELASTICSEARCH_HOST=elasticsearch
    export KIBANA_HOST=kibana

    # 1 is a placeholder. Gets changed during deploy
    export CONF_VERSION=1

    docker network ls | grep elastic > /dev/null || docker network create --driver overlay --attachable elastic
    docker stack deploy --prune --compose-file docker-compose.yml elkstack

Happy dockering!
